🔙 Circuitbox: How to Circuit Breaker in Ruby

A few years ago, I wrote a post called "Hystrix: from creating resilient applications to monitoring metrics", where I talked about avoiding catastrophes with circuit breakers in Java and monitoring our applications using Prometheus and Grafana.

This post focuses on using Circuitbox, a Ruby gem for creating circuit breakers.

If you'd rather not read right now, a video version of this post with extended examples is available below.

Service to service communication

When building a service, it is not uncommon to make remote calls to APIs to perform some action. These points of connection to external services turn out to be points of failure in our applications.

Downstream services help us achieve our goals, but the more critical a service is, the more significant the impact its failure will generate and propagate across the platform.

If any external API stops working, would your application still operate?

Circuit Breakers ⚡️

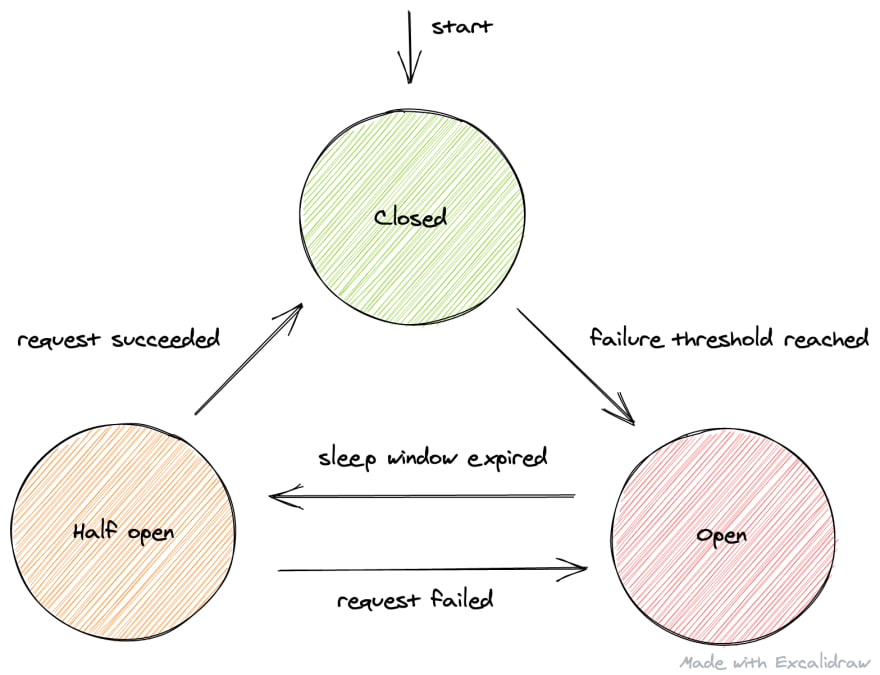

Circuit Breaker is a software design pattern that monitors failures of remote calls. When the failures reach a certain threshold, it "opens the circuit" and forwards all calls to an alternative flow that handles the error gracefully and/or provides a default behaviour for the feature.

The pattern also defines a time window after which it will call the service again to check its health. When the downstream API starts responding within the same thresholds again, the circuit closes and re-enables the main flow.

The intention behind circuit breakers is to avoid calling a failing service when we already know that it is unstable and unreliable at the moment. We can then decide what to do instead and keep our systems operating, or at least partially operating.
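To make the pattern concrete, here is a minimal sketch of a circuit breaker in plain Ruby. It is not how Circuitbox is implemented: the class name `TinyBreaker`, the `failure_threshold` and `sleep_window` options, and the injectable `clock` are all made up for illustration, and it counts consecutive failures instead of an error rate to keep things short.

```ruby
# A toy circuit breaker that only illustrates the pattern.
class TinyBreaker
  class OpenError < StandardError; end

  attr_reader :state

  # clock is injectable so the example can move time forward without sleeping.
  def initialize(failure_threshold: 3, sleep_window: 2,
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @failure_threshold = failure_threshold
    @sleep_window = sleep_window
    @clock = clock
    @failures = 0
    @state = :closed
    @opened_at = nil
  end

  def run
    if @state == :open
      # Fail fast while the sleep window is still running.
      raise OpenError, "circuit is open" if @clock.call - @opened_at < @sleep_window

      @state = :half_open # window elapsed: let one trial call through
    end

    result = yield
    # A success closes the circuit and resets the failure count.
    @failures = 0
    @state = :closed
    result
  rescue OpenError
    raise # short-circuited calls are not counted as failures
  rescue StandardError
    @failures += 1
    # Open on a failed trial call or once the threshold is reached.
    if @state == :half_open || @failures >= @failure_threshold
      @state = :open
      @opened_at = @clock.call
    end
    raise
  end
end

now = 0.0
breaker = TinyBreaker.new(failure_threshold: 2, sleep_window: 2, clock: -> { now })

2.times { breaker.run { raise IOError, "service is down" } rescue nil }
breaker.state # => :open

now = 3.0                  # the sleep window has passed
breaker.run { "recovered" } # the trial call goes through
breaker.state # => :closed
```

The fake clock is only there so we can jump past the sleep window instantly; a real breaker would read the system time.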

Some use cases

Fail fast: We don't wait for a timeout if we know it won't result in success.

Partially fail: When we have an option to continue with a different response from the ideal.

Best effort: We try to call the other service, but we don't care about the result, e.g. non-critical analytics.
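As a rough sketch of the last two use cases in plain Ruby (failing fast is what an open circuit gives us by itself), we could wrap calls in small helpers. The method names `with_fallback` and `best_effort` are hypothetical and only serve the example:

```ruby
# Partially fail: return a degraded value when the call raises.
def with_fallback(fallback)
  yield
rescue StandardError
  fallback
end

# Best effort: attempt the call and ignore both its result and any error.
def best_effort
  yield
  nil
rescue StandardError
  nil
end

# The page still renders, just without recommendations.
recommendations = with_fallback([]) { raise IOError, "recommendation service is down" }

# Losing an analytics event should never break the request.
best_effort { raise IOError, "analytics service is down" }
```

Rescuing `StandardError` is a deliberately broad choice for the sketch; in a real application we would probably rescue only the errors we expect from the remote call.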

Hands-on 🙌

What does using a circuit breaker look like in Ruby?

The following exercise code is available on GitHub at circuitbox-example. There we have a Node server and a Rails application. The Node server will be the downstream service consumed by our main Rails application.

Simulating a failure ⚠️

To simulate a downstream service, we have the target-server, an Express server with only the index route (/). We will be querying it from our main application.

    app.get("/", function (req, res) {
      const delay = req.query.speed === "fast" ? 50 : 200;
      setTimeout(function () {
        res.sendStatus(200);
      }, delay);
    });

To facilitate our experiments, we are going to pass a parameter called speed in the query string. We'll use that parameter to simulate a slow or a fast network response.

Whenever it receives speed as fast, then the server will delay the answer by 50ms. If it gets anything else, it will delay the reply by 200ms.

The consumer 💻

In our Rails application, I've created an index route as well. It will have the same parameter as target-server that will be passed down to the remote call.

We are going to perform ten calls in sequence to our downstream service and return all the answers at once. The following code will do the work for us:

    def index
      speed = params[:speed]
      answers = Array(0..9).map do |i|
        sleep(1)
        puts "Request number #{i+1}"
        HTTP.get("http://localhost:4000/?speed=#{speed}").status
      end
      render json: answers
    end

We take the speed parameter, and then we map through an array of size ten doing a call for every element passing down our query param. We delay every call by 1 second on purpose, so later we can tweak the circuit configuration and see different results. Finally, we render the answers array with all the responses from our API.

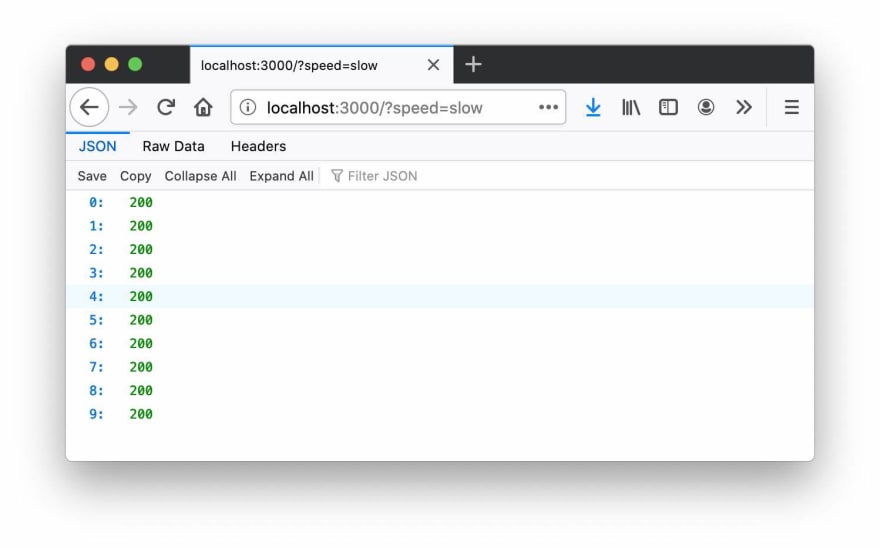

The app is hitting the Express server, and that looks great! 🚀 Even though the requests are "slow", they are successful because we have not set any timeouts.

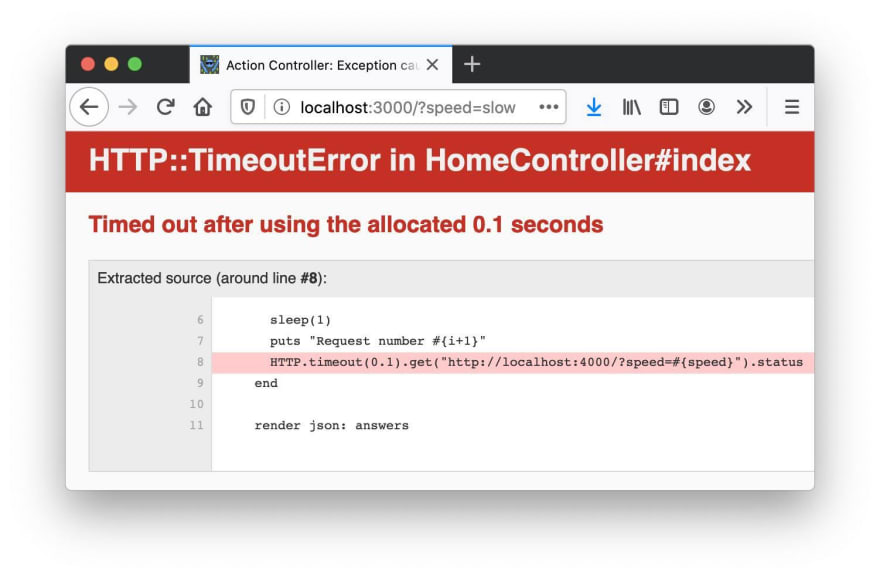

Changing the remote call to HTTP.timeout(0.1).get sets the timeout to 100ms, so we should see an error, as the API delays its response by 200ms.

And voilà ✨ we got our exception!

Let's make a note of that HTTP::TimeoutError because we are going to need it later.
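If you want to see the same effect without running the two servers, here is a small stand-in using only Ruby's standard library. It is an approximation: `sleep` plays the role of the server's delay, the hypothetical `call_with_budget` method plays the role of the remote call, and the error raised is `Timeout::Error` instead of the HTTP gem's `HTTP::TimeoutError`.

```ruby
require "timeout"

# delay simulates how long the server takes; budget is our 100ms timeout.
def call_with_budget(delay:, budget: 0.1)
  Timeout.timeout(budget) do
    sleep(delay) # pretend to wait for the response
    200
  end
rescue Timeout::Error
  :timed_out
end

call_with_budget(delay: 0.2)  # slow server: we give up after roughly 100ms
call_with_budget(delay: 0.05) # fast server: answers within the budget
```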

Adding the Circuitbox 📦

Circuitbox is a Ruby gem for creating circuit breakers. It describes itself like this:

"It wraps calls to external services and monitors for failures in one minute intervals. Once more than 10 requests have been made with a 50% failure rate, Circuitbox stops sending requests to that failing service for one minute. That helps your application gracefully degrade."

We can add it to our Gemfile as follows:

    gem 'circuitbox', '~> 1.1', '>= 1.1.1'

To use the circuit breaker, we need to create a circuit with Circuitbox.circuit, passing at least two arguments: the server/action name and an array of exceptions.

The exceptions collection is there for counting failures, so if we get an error that is not in that collection, it will not count towards the circuit threshold. Do you remember that HTTP::TimeoutError? We are going to use it now 😁.
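To illustrate the idea of an exceptions list, here is a tiny simulation in plain Ruby. It is not Circuitbox's code: `FailureCounter` and `FakeTimeoutError` (standing in for `HTTP::TimeoutError`) are invented for the example, and I'm assuming that errors outside the list simply propagate to the caller.

```ruby
# Stand-in for HTTP::TimeoutError so the example has no dependencies.
class FakeTimeoutError < StandardError; end

# Counts only the errors it was told to track.
class FailureCounter
  attr_reader :failures

  def initialize(exceptions:)
    @exceptions = exceptions
    @failures = 0
  end

  def run
    yield
  rescue *@exceptions
    # A tracked error counts as a failure and is swallowed.
    @failures += 1
    nil
  end
end

counter = FailureCounter.new(exceptions: [FakeTimeoutError])

counter.run { raise FakeTimeoutError, "too slow" }
counter.failures # => 1

begin
  counter.run { raise ArgumentError, "a bug in our own code" }
rescue ArgumentError
  # Not in the list: it reaches the caller and is not counted.
end
counter.failures # => 1
```

Keeping the list narrow means bugs in our own code still surface as errors instead of being mistaken for an unhealthy downstream service.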

    class TargetClient
      def initialize(speed:)
        @speed = speed
        @circuit = ::Circuitbox.circuit(:target_server, exceptions: [HTTP::TimeoutError])
      end

      attr_reader :speed, :circuit

      def call
        circuit.run(circuitbox_exceptions: false) do
          HTTP.timeout(0.1).get("http://localhost:4000/?speed=#{speed}").status
        end
      end
    end

We can create a TargetClient class that encapsulates the logic of creating the circuit breaker and making the request. On initialisation, it receives and saves the speed parameter and creates the circuit, assigning the target_server name and the exception we want to track.

    def index
      speed = params[:speed]
      answers = Array(0..9).map do |i|
        sleep(1)
        puts "Request number #{i+1}"
        TargetClient.new(speed: speed).call
      end
      render json: answers
    end

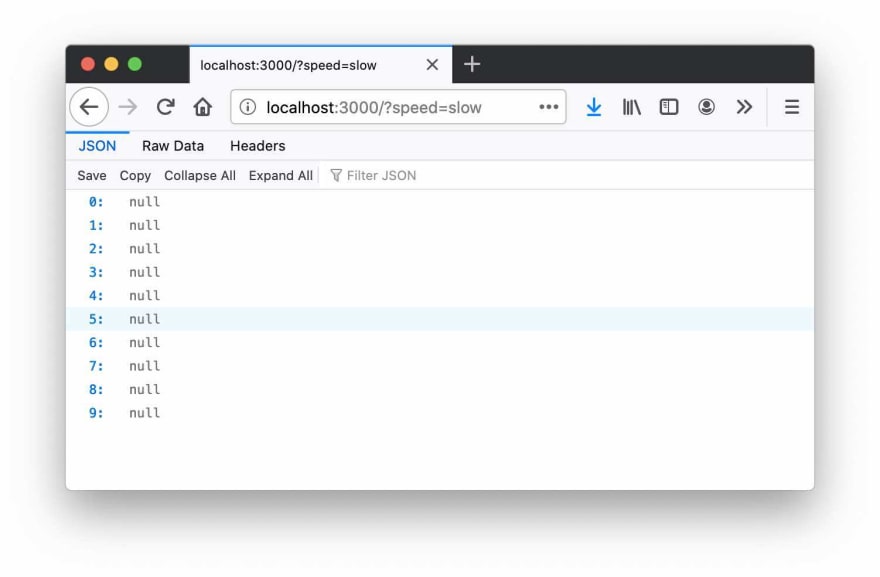

By default, the circuit will return nil for failed requests and open circuits. So if we hit the endpoint again with speed=slow, we should expect all the answers to be nil, with no exception thrown.

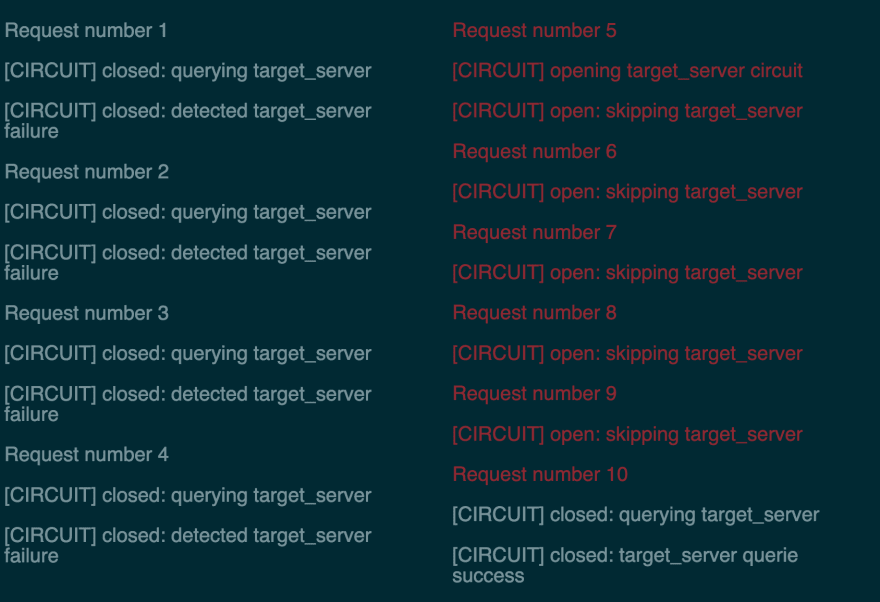

And that is what happens 🥳! Because we gave a name to the circuit, the gem can track down the requests across all the instances of it. Another cool thing it does by default is to log the status of the call and whether or not the circuit is open.

The first message indicates that we are making the request. The second one exposes the status of the request (a failure in this case). Although all the requests failed, the circuit remained closed because we are not hitting the threshold necessary to open it.

Tweaking configs ⚙️

Circuitbox provides a set of configuration options we can change to fine-tune its behaviour and make it fit our needs. Some of them are:

- sleep_window: seconds the circuit stays open once it has passed the error threshold. Defaults to 300.

- time_window: length of the interval (in seconds) over which it calculates the error rate. Defaults to 60.

- volume_threshold: number of requests within time_window seconds before it calculates error rates. Defaults to 10.

- error_threshold: exceeding this rate will open the circuit (checked on failures). Defaults to 50%.

Knowing that default configuration, we can conclude that the circuit will open if within 60 seconds we get at least 10 requests and 50% of them fail. For the sake of the exercise, we can adjust those numbers to force the circuit to open and see the results.
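That rule can be written down as a small check. This is only a sketch of the description above, not Circuitbox's source: the method name `should_open?` is hypothetical, and treating both thresholds as inclusive ("at least") is my assumption, so the gem may behave differently exactly at the boundary.

```ruby
# successes and failures are the counts observed within one time_window.
def should_open?(successes:, failures:, volume_threshold: 10, error_threshold: 50)
  total = successes + failures
  return false if total < volume_threshold # not enough traffic to judge yet

  error_rate = failures * 100.0 / total
  error_rate >= error_threshold
end

should_open?(successes: 2, failures: 2) # => false, only 4 requests so far
should_open?(successes: 5, failures: 5) # => true, 10 requests and 50% failed
should_open?(successes: 8, failures: 2) # => false, 10 requests but only 20% failed
```

Lowering `volume_threshold` makes the circuit react after fewer requests, which is what we are about to do for the exercise.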

    @circuit = ::Circuitbox.circuit(
      :target_server,
      exceptions: [HTTP::TimeoutError],
      time_window: 5,
      volume_threshold: 5,
    )

Setting both the time_window and the volume_threshold to 5 will open the circuit when we get at least 5 requests within 5 seconds and 50% of them fail. We can then try hitting our app again with /?speed=slow.

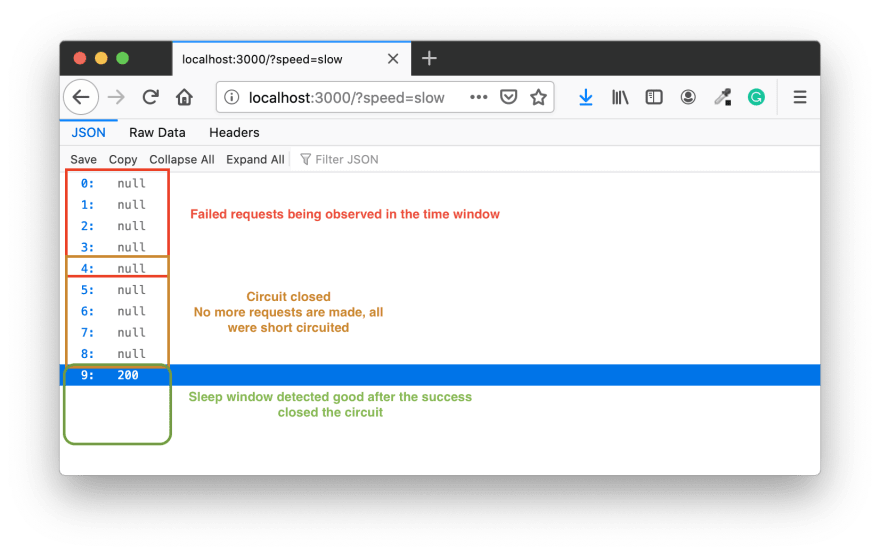

These are the logs after executing it again. If we were to look at the actual response of the Rails app, we would not notice anything different; the answers would be all nil again. However, there is some cool stuff going on under the hood.

We are still passing slow as the parameter, and as a result, all requests should fail. After the 5th request, the gem calculates the error rate, and we get above the 50% failure threshold. Therefore the circuit opens, and we stop querying the API and just default to the nil answer. That's why it stops logging the querying message and its status.

Watching it recover 📈

The beauty of using circuit breakers is that we avoid calling a failing service when we already know that it is unstable, but beyond that, we also check back to see whether it has recovered.

Let's now tweak our inputs to force the instability and then force a fast response.

To simulate the API recovering, we are going to change the speed parameter passed down. We start with whatever we get from the query string, but after some requests, we change it to fast. For example:

    answers = Array(0..9).map do |i|
      sleep(1)
      puts "Request number #{i+1}"
      speed = 'fast' if i > 3
      TargetClient.new(speed: speed).call
    end

On the app side of things, we are keeping time_window at 5 seconds, so the observed interval is the same. However, let's decrease volume_threshold to 2, so we need at least 2 requests before the error rate is calculated. Finally, let's override sleep_window to be 2 seconds (by default it waits 300 seconds).

With a shorter sleep_window, we should expect the circuit to get back to the API sooner. The new configuration should look like the following:

    @circuit = ::Circuitbox.circuit(
      :target_server,
      exceptions: [HTTP::TimeoutError],
      time_window: 5,
      volume_threshold: 2,
      sleep_window: 2
    )

After calling our endpoint again, we get null on every answer besides the last one.

We can break down the flow of our requests as:

- The first 4 requests happen within the 5-second window, and the gem realises we hit our threshold. Therefore, it opens the circuit.

- Our next attempt to request the API will pass speed=fast, as we set it to do that if i > 3.

- Because the circuit is open, all attempts during the sleep_window will be short-circuited and return null immediately.

- After some time, the sleep_window ends and the circuit closes. That lets the following request reach the API again, and as we passed speed=fast, the request will succeed.

The actual logs tell us that story.

And that is awesome 🥳! We were able to simulate the whole process of querying an API in a bad state, opening the circuit and querying it back once everything is good!

Conclusions 🌯

A circuit breaker is a pattern that helps us avoid catastrophes. We manage to make our applications fail gracefully, and therefore they end up being more resilient. It has helped me in past experiences on Java and Ruby projects, and there are many implementations on GitHub for other languages as well.

I'm curious about your thoughts: how do you all avoid catastrophes? Have you ever tried circuit breakers?

Let me know what you think about this post on Twitter!